Discord and GeForce NOW: Revolutionizing In-App Gaming

Introduction to a New Gaming Era

Discord, the go-to platform for gamers and communities worldwide, has taken a major step forward by integrating NVIDIA’s GeForce NOW cloud gaming service. Announced at Gamescom 2025, the partnership lets users play high-quality games directly within Discord, with no downloads, installations, or external launchers. With Fortnite as the flagship title for the rollout, the collaboration aims to change how gamers connect, play, and discover new titles, all within the familiar Discord interface.

How It Works: Seamless Integration

The integration uses NVIDIA’s Graphics Delivery Network (GDN) to launch games directly from Discord’s voice or text channels. In a closed-door demo at Gamescom 2025, attendees played Fortnite streamed at up to 1440p resolution and 60 frames per second (FPS), with smooth performance even on low-spec devices. Because the game runs in the cloud, players can join sessions without worrying about hardware limitations or lengthy setup. The system also supports cross-platform play, so friends on different devices can play together.

The Power Behind the Scenes: Blackwell Architecture

The upgraded GeForce NOW service runs on NVIDIA’s Blackwell architecture. Starting in September 2025, GeForce NOW will roll out RTX 5080-class GPUs with 62 teraflops of compute, a 48GB frame buffer, and 2TB/s of memory bandwidth. The upgrade enables streaming at up to 5K resolution and 120 FPS, plus a new Cinematic Quality Streaming (CQS) mode for higher visual fidelity. NVIDIA positions the new hardware as keeping latency low enough for cloud play to feel close to local play.

Enhancing Social Gaming

Discord’s 2025 user data highlights the platform’s role in social gaming: 72% of its 150 million monthly active users play games with friends weekly, and group sessions last seven times longer than solo play. The GeForce NOW integration builds on this by embedding gaming directly into Discord’s social framework. For example, users can start a 30-minute Fortnite trial in a voice channel and invite friends to join instantly, which encourages spontaneous sessions. A limited-time free trial of GeForce NOW’s Performance tier will also be offered, requiring only an Epic Games account to start playing, which lowers the barrier to entry.

Expanding the Game Library with Install-to-Play

One of the standout features of the integration is “Install-to-Play”, which lets users access their existing PC game libraries on GeForce NOW without reinstalling titles for each session. The feature will double the GeForce NOW library to over 4,500 titles, including popular games from platforms like Steam, Epic Games Store, and Xbox. Because game progress is saved in the cloud, players can pick up where they left off on any device. This is particularly appealing for Discord users who already manage their gaming communities within the platform.

What’s Next: The Future of Discord Gaming

While the Gamescom 2025 demo was a promising first look, NVIDIA and Discord have not yet announced an official release date for the full integration. The partnership is expected to evolve quickly, with plans to support additional titles and features. The integration fits Discord’s broader aim of being more than a communication tool: a central hub for gaming, social interaction, and content discovery. Future updates may include deeper integration with Discord’s Activities and Shop features, letting users discover and purchase games directly within the app.

Challenges and Considerations

Despite the excitement, challenges remain. Cloud gaming depends on a stable, high-speed internet connection to keep latency low. And while the free trial makes the service accessible, the full GeForce NOW experience may require a paid subscription for extended playtime or premium features; those details have not yet been disclosed. Discord and NVIDIA will need to keep the experience intuitive and inclusive to preserve the platform’s broad appeal.

Conclusion

The partnership between Discord and GeForce NOW marks a significant milestone in the evolution of cloud gaming. By combining Discord’s social platform with NVIDIA’s cloud infrastructure, the integration offers a glimpse of a future where accessibility, performance, and community converge. As the rollout progresses, gamers can expect a more connected experience, with Discord positioned to become a one-stop destination for both communication and play.

Aug 20

The quickest way to fix Getsockopt Minecraft error

The "Connection Timed Out: getsockopt" error in Minecraft is a common network issue that prevents players from connecting to servers or LAN games. This guide walks through step-by-step fixes, focusing on firewall settings, network configuration, and other troubleshooting methods, so you can get back to playing.

Understanding the Getsockopt Error

The getsockopt error occurs when Minecraft cannot establish a connection with a server because of network problems such as firewall restrictions, misconfigured ports, or DNS issues. It appears as the message "Connection Timed Out: getsockopt". It is most common on Windows, where security software often blocks the Java executable that Minecraft runs on.

Quick Fixes to Try First

Before moving on to advanced solutions, try these simple steps.

Restart Minecraft and Your Network
1. Close Minecraft completely and relaunch it.
2. Check your internet connection by loading a website or playing another online game.
3. Restart your router: unplug it for 30 seconds, then plug it back in.

Ensure Matching Game Versions
1. Confirm that you and your friends are using the same Minecraft version.
2. For LAN games, make sure all players are on the same network.

Adjusting Firewall Settings

The most common cause of the getsockopt error is Windows Defender Firewall blocking Minecraft’s Java executable. Follow these steps to allow Minecraft through the firewall.

Allow Minecraft and Java Through Firewall
1. Open the Start menu and search for Windows Defender Firewall.
2. Select Allow an app or feature through Windows Defender Firewall.
3. Click Change settings (administrator privileges required).
4. Locate Java-related entries such as javaw.exe or Java Platform SE Binary, and check both the Private and Public boxes next to them.
5. If Minecraft isn’t listed, click Allow another app, then Browse, and add Minecraft.exe and MinecraftLauncher.exe.
6. Save the changes and test the connection.

Note: Turning off the firewall entirely is not recommended, as it leaves your system vulnerable. Allowing specific apps is safer.

Exclude Minecraft from Windows Defender
On Windows 10/11, excluding Minecraft from Windows Defender scanning can also help.
1. Go to Start > Settings > Update & Security > Windows Security > Virus & threat protection (on Windows 11: Settings > Privacy & security > Windows Security > Virus & threat protection).
2. Under Virus & threat protection settings, click Manage settings.
3. Scroll to Exclusions and select Add or remove exclusions.
4. Add the Minecraft installation folder.
5. Test the connection again.

Configuring Network Settings

If firewall adjustments don’t work, a network misconfiguration may be the culprit.

Change DNS Settings
Switching to Google’s public DNS can improve connection stability.
1. Open Control Panel > Network and Internet > Network and Sharing Center.
2. Click Change adapter settings.
3. Right-click your active connection (Ethernet or Wi-Fi) and select Properties.
4. Select Internet Protocol Version 4 (TCP/IPv4) and click Properties.
5. Set the following DNS servers:
   - Preferred DNS: 8.8.8.8
   - Alternate DNS: 8.8.4.4
6. Save the changes and restart your computer.

Set Up Port Forwarding
If you’re hosting a server, make sure port 25565 (Minecraft’s default) is forwarded to your computer.
1. Log into your router (usually at 192.168.1.1 or 192.168.0.1).
2. Find the Port Forwarding or Virtual Servers section.
3. Add a new rule:
   - Service Port: 25565
   - Internal Port: 25565
   - IP Address: your computer’s local IP (find it by running ipconfig in Command Prompt)
   - Protocol: TCP/UDP
4. Save and restart your router.
5. Verify the port: run netstat -an on the host to confirm the server is listening on 25565, then use an online port checker to confirm the port is reachable from outside your network.

Advanced Troubleshooting

If the error persists, try these additional steps.

Check for Antivirus Interference
Antivirus software may block Minecraft’s connections.
1. Temporarily disable your antivirus or add Minecraft and Java to its exceptions list.
2. If the error resolves, configure your antivirus to allow Minecraft.exe and javaw.exe permanently.

Update Java and Minecraft
1. The official launcher ships with the Java runtime the game needs. If you run the game or a server with your own Java installation, make sure it is Java 21, which Minecraft 1.20.5 and later require.
2. Update Minecraft to the latest version via the launcher.

Check Server Status
1. If you’re connecting to a public server, check whether it’s online using sites like minecraftstatus.net.
2. For hosted servers, confirm the server is running and not in maintenance. Contact the host’s support if needed.

When to Contact Support
If none of the above solutions work:
1. File a bug report on Mojang’s bug tracker at bugs.mojang.com.
2. Contact Mojang Support via the official Minecraft website.
3. For third-party servers, reach out to the server admin or hosting provider.

Conclusion

The getsockopt error in Minecraft is typically caused by firewall blocks, network misconfiguration, or outdated software. Start with the firewall adjustments, as they resolve the issue for most players. If that fails, work through the DNS changes, port forwarding, and advanced troubleshooting steps. With these steps, you’ll likely be back in your Minecraft world in no time. Happy gaming!
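If you host a server and want to check whether the timeout is a network problem rather than a client problem, a short TCP connection test can help. The sketch below assumes Node.js is installed; checkPort is a hypothetical helper (not part of Minecraft or any Mojang tool), and it only tests whether a TCP connection to the port opens, not the Minecraft protocol itself. Run it from a machine outside your network to test port forwarding.

```javascript
// Minimal TCP reachability check for a Minecraft server port (sketch).
// checkPort is a hypothetical helper; it does not speak the Minecraft protocol.
const net = require('net');

function checkPort(host, port = 25565, timeoutMs = 5000) {
  return new Promise((resolve) => {
    const socket = net.createConnection({ host, port });
    let settled = false;

    // Resolve exactly once and always close the socket.
    const finish = (result) => {
      if (settled) return;
      settled = true;
      socket.destroy();
      resolve(result);
    };

    socket.setTimeout(timeoutMs); // emits 'timeout' after timeoutMs with no activity
    socket.once('connect', () => finish({ ok: true }));
    // Silently dropped packets (e.g. a firewall or missing port forward) end here.
    socket.once('timeout', () => finish({ ok: false, reason: 'timed out' }));
    // Refused or unreachable connections end here (e.g. ECONNREFUSED).
    socket.once('error', (err) => finish({ ok: false, reason: err.code || err.message }));
  });
}

// Example with a placeholder host:
// checkPort('play.example.com').then((r) => console.log(r));
```

A result of { ok: false, reason: 'timed out' } typically corresponds to the same dropped-packet situation behind "Connection Timed Out: getsockopt", pointing at a firewall or port-forwarding issue; ECONNREFUSED instead usually means the packets arrived but nothing is listening on that port.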

Aug 19

The quickest way to fix React CORS errors

Introduction

Cross-Origin Resource Sharing (CORS) errors occur in React applications when the browser blocks a request to a different domain, protocol, or port because of its security policy. The error typically reads "No 'Access-Control-Allow-Origin' header is present on the requested resource." This article explains how to diagnose and resolve CORS issues in React apps, with practical solutions for development and production environments.

Understanding CORS in React

CORS errors arise when a React frontend (e.g., running on localhost:3000) fetches data from an API on a different origin (e.g., api.example.com). The browser enforces the Same-Origin Policy, so the server must send specific response headers to allow cross-origin requests.

Causes of CORS Errors
- The server does not send an Access-Control-Allow-Origin header for the client's origin.
- Mismatched protocols (e.g., an HTTP frontend requesting an HTTPS API) make the origins different.
- Local development requests hit a production server that only allows the production origin.
- API endpoints or headers are configured incorrectly.

Solutions for CORS Errors in React

Server-Side Configuration
The most reliable fix is to configure the server to allow requests from your React app's origin.
Steps:
1. For Node.js/Express servers, install the cors package:
    npm install cors
2. Add middleware to allow specific origins:
    const express = require('express');
    const cors = require('cors');

    const app = express();
    app.use(cors({ origin: 'http://localhost:3000' }));
3. For production, update the origin to your deployed frontend URL (e.g., 'https://your-app.com').
4. Verify the headers in browser DevTools (Network tab) to ensure Access-Control-Allow-Origin matches your frontend's origin.
5. For APIs you don't control, check their documentation for CORS support or contact the provider.

Proxy Setup in Development
Create React App's development server supports proxying, which bypasses CORS during development.
Steps:
1. Install http-proxy-middleware:
    npm install http-proxy-middleware
2. Create src/setupProxy.js in your React project:
    const { createProxyMiddleware } = require('http-proxy-middleware');

    module.exports = function (app) {
      app.use(
        '/api',
        createProxyMiddleware({
          target: 'https://api.example.com',
          changeOrigin: true,
        })
      );
    };
3. Update API calls to use relative paths (e.g., fetch('/api/data') instead of fetch('https://api.example.com/data')).
4. Restart the development server to apply the changes.
Note: This is a development-only solution; the proxy does not exist in a production build.

Using Fetch with CORS Mode
Make sure your fetch requests in React are configured correctly.
Steps:
1. Optionally set { mode: 'cors' } in the fetch options:
    fetch('https://api.example.com/data', { mode: 'cors' })
      .then(response => response.json())
      .then(data => console.log(data))
      .catch(error => console.error('CORS error:', error));
2. 'cors' is already the default mode for cross-origin fetch requests, so setting it explicitly only documents intent; if the server doesn't send CORS headers, the request still fails. Don't switch to mode: 'no-cors' as a workaround: it returns an opaque response whose body your code cannot read.
3. For POST requests with a Content-Type: application/json header, the browser first sends a preflight OPTIONS request, so the server must allow the method via Access-Control-Allow-Methods and the header via Access-Control-Allow-Headers.

JSONP as a Fallback (Limited Use)
For GET requests to APIs that lack CORS support but do support JSONP, JSONP can be a workaround, though it is outdated and less secure.
Steps:
1. Use a library such as jsonp:
    npm install jsonp
2. Example (the library appends the callback query parameter itself):
    import jsonp from 'jsonp';

    jsonp('https://api.example.com/data', (err, data) => {
      if (err) console.error(err);
      else console.log(data);
    });
3. Avoid JSONP for sensitive data because of security risks (e.g., script injection), and use it only when other solutions are not feasible.

Handling Errors Gracefully
Improve the user experience by catching and handling CORS errors in your React app.
Steps:
1. Wrap API calls in try/catch:
    async function fetchData() {
      try {
        const response = await fetch('https://api.example.com/data');
        if (!response.ok) throw new Error(`Network response was not ok (HTTP ${response.status})`);
        return await response.json();
      } catch (error) {
        // A blocked CORS request rejects with a TypeError that looks like any network failure
        console.error('CORS or network error:', error);
        alert('Failed to fetch data. Please try again later.');
        return null;
      }
    }
2. Display user-friendly messages using components such as a Toast or Modal from libraries like Material-UI.
3. Log errors to monitoring tools like Sentry for debugging.

Testing and Debugging
- Open DevTools > Network tab, reproduce the request, and check for CORS-related headers or errors.
- Use tools like Postman to test API endpoints independently. Postman does not enforce CORS, so if the API works in Postman but fails in the browser, check that the server's CORS headers are set correctly.
- Test in different browsers, as some (e.g., Firefox) provide clearer CORS error messages.

Production Considerations
- Serve the frontend and backend from the same origin (e.g., the app at https://app.example.com and the API at https://app.example.com/api) to avoid CORS entirely. Subdomains such as app.example.com and api.example.com are different origins and still need CORS headers.
- Use a reverse proxy (e.g., Nginx) to route API requests under the same origin as the frontend.
- Enable HTTPS for both frontend and backend to avoid mixed-content errors.
- For multiple allowed origins in production, check each request's origin against an allowlist:
    const allowedOrigins = ['https://your-app.com', 'http://localhost:3000'];

    app.use(cors({
      origin: (origin, callback) => {
        // Requests without an Origin header (curl, server-to-server) are allowed
        if (!origin || allowedOrigins.includes(origin)) {
          callback(null, true);
        } else {
          callback(new Error('Not allowed by CORS'));
        }
      },
    }));

Best Practices
- Configure CORS on the server whenever possible; client-side workarounds are less reliable.
- Use environment variables for API URLs to switch between development and production easily.
- Monitor CORS errors in production with tools like Sentry or LogRocket.
- Write unit tests for API calls using Jest and msw to mock responses and test error handling.
- Show users clear error messages instead of letting requests fail silently.
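To see roughly what the cors middleware does under the hood, here is a minimal, dependency-free sketch using only Node's built-in http module. The allowlist values, the corsHeaders and handler names, and the allowed methods and headers are illustrative assumptions, not the exact headers the cors package emits. It echoes an allowed Origin back and answers the preflight OPTIONS request that a JSON POST triggers.

```javascript
// Origin allowlist with preflight handling, using only Node's http module.
// Origins, methods, and headers below are illustrative; adjust to your API.
const http = require('http');

const ALLOWED_ORIGINS = ['http://localhost:3000', 'https://your-app.com'];

// CORS response headers for a request Origin; empty object if not allowed.
function corsHeaders(origin) {
  if (!origin || !ALLOWED_ORIGINS.includes(origin)) return {};
  return {
    'Access-Control-Allow-Origin': origin, // echo the exact allowed origin
    'Access-Control-Allow-Methods': 'GET, POST, OPTIONS',
    'Access-Control-Allow-Headers': 'Content-Type', // needed for JSON POSTs
    Vary: 'Origin', // responses differ per Origin, so caches must key on it
  };
}

function handler(req, res) {
  const headers = corsHeaders(req.headers.origin);

  // Preflight: the browser sends OPTIONS before a non-simple request,
  // such as a POST with Content-Type: application/json.
  if (req.method === 'OPTIONS') {
    res.writeHead(204, headers);
    res.end();
    return;
  }

  // Disallowed origins still get a response, but without CORS headers,
  // so the browser refuses to expose it to the page.
  res.writeHead(200, { ...headers, 'Content-Type': 'application/json' });
  res.end(JSON.stringify({ ok: true }));
}

// Usage: http.createServer(handler).listen(4000);
```

Echoing the specific origin (together with Vary: Origin) instead of sending a wildcard keeps the setup valid if you later send cookies, because browsers reject Access-Control-Allow-Origin: * on credentialed requests.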
Conclusion CORS errors in React can be resolved by configuring the server, using proxies in development, or handling errors gracefully. By combining server-side fixes with robust client-side error handling, you can ensure a seamless user experience. Regular testing and monitoring are essential to catch and fix issues early.

Aug 14

Exploring GPT-OSS: OpenAI's Open-Weight Language Model

The release of GPT-OSS by OpenAI is a pivotal moment in the democratization of artificial intelligence. By offering open-weight models, OpenAI lets developers, researchers, and organizations use advanced language models without relying on proprietary APIs. This article covers the GPT-OSS family: its architecture, performance, deployment options, use cases, and ethical considerations. The series includes two models: gpt-oss-120b with 117 billion parameters and gpt-oss-20b with 21 billion parameters. They are designed for tasks ranging from complex reasoning to efficient inference on edge devices, which makes them useful for both enterprise and individual use.

What is GPT-OSS?

Background and Purpose
GPT-OSS ("OSS" as in open-source software) is OpenAI's first open-weight language model release since GPT-2 in 2019. Released under the Apache 2.0 license, gpt-oss-120b and gpt-oss-20b support a wide range of applications, including natural language processing, code generation, and agentic workflows. Unlike fully open-source models, GPT-OSS publishes the model weights but not the training data; the weights still allow local deployment and customization, and Apache 2.0 permits commercial use. The release aims to foster innovation by giving researchers and developers models they can experiment with, fine-tune, and deploy without the constraints of cloud-based APIs.

Key Features and Capabilities
- Reasoning capabilities: Both models support chain-of-thought reasoning with configurable effort levels (low, medium, high), letting users trade computational cost against response quality.
- Mixture-of-Experts architecture: gpt-oss-120b activates 5.1 billion of its 117 billion parameters per token (routing across 128 experts), while gpt-oss-20b activates 3.6 billion of its 21 billion, which keeps inference efficient without sacrificing performance.
- Extended context window: A 128,000-token context window enables processing of long documents, conversations, or codebases.
- Quantization support: Native MXFP4 quantization lets gpt-oss-120b run on a single 80GB GPU and gpt-oss-20b on devices with as little as 16GB of memory, putting the smaller model within reach of consumer hardware.
- Multimodal potential: While primarily text-based, the models include hooks for future multimodal extensions, such as image processing.

Technical Architecture

Model Design
Both GPT-OSS models are transformers with a Mixture-of-Experts design. Their attention layers alternate between dense and locally banded sparse patterns, similar to GPT-3, but optimized for efficiency. gpt-oss-120b has 36 layers and 128 experts, of which only 4 are active per token, making it very sparse. gpt-oss-20b is leaner, with 24 layers and 32 experts (also 4 active per token), and is aimed at resource-constrained environments. Both use Rotary Positional Embedding (RoPE) to support the 128,000-token context window and grouped multi-query attention to reduce memory overhead during inference.

Performance Benchmarks
- gpt-oss-120b: Competes closely with OpenAI's proprietary o4-mini on benchmarks such as Codeforces (coding), MMLU (general knowledge), and TauBench (agentic tool use). It is strong in specialized domains, scoring 92 percent on HealthBench for medical queries and outperforming competitors on AIME 2024 and 2025 math problems.
- gpt-oss-20b: Matches or surpasses o3-mini on similar benchmarks, achieving 85 percent on HealthBench and strong performance on math and coding tasks. Its efficiency makes it well suited to lightweight applications on edge devices.
These benchmarks highlight the models' ability to handle complex tasks while remaining resource-efficient.

Deployment Options

Local Deployment
Running GPT-OSS locally is straightforward with tools like Ollama, LM Studio, or Hugging Face's transformers library. For gpt-oss-20b, use your chosen tool's commands to pull and run the model.
For gpt-oss-120b, you need a single H100 GPU or equivalent, using the same tools to download and run the model. Local deployment keeps data on your own hardware and removes reliance on cloud services, which suits sensitive applications.

Cloud Deployment
Cloud providers such as Azure, AWS, and Northflank support GPT-OSS for scalable inference. A sample setup on Northflank serves the model with tensor parallelism for high-throughput applications such as real-time chatbots or automated content generation.

Use Cases and Applications

Research and Development
Researchers can use GPT-OSS to explore novel AI architectures, fine-tune models for domain-specific tasks, or benchmark against proprietary systems. Because the weights are open, researchers have full access to the model parameters for experiments in areas like transfer learning or reinforcement learning.

Enterprise Applications
Businesses can deploy GPT-OSS for tasks like automated customer support, document summarization, or code review. For example, a company could fine-tune gpt-oss-20b to generate technical documentation from its codebases and run it on-premises to keep data secure.

Edge Computing
gpt-oss-20b's low resource requirements make it suitable for edge devices such as IoT systems or mobile applications. For instance, it could power offline chatbots or real-time translation apps on high-end devices.

Education and Training
Educational institutions can use GPT-OSS to teach AI concepts, build interactive learning tools, or create personalized tutoring systems. The models' reasoning ability makes them well suited to generating practice problems or explaining concepts in subjects like mathematics or computer science.
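The hardware figures quoted under Quantization Support, and the active-parameter counts from the Mixture-of-Experts description, can be sanity-checked with simple arithmetic. The sketch below assumes every weight is stored in MXFP4 at 4.25 bits per parameter (4-bit values plus one shared 8-bit scale per block of 32). That is a simplification: the released checkpoints quantize only the MoE expert weights, and inference also needs memory for the KV cache and activations, so read the results as rough lower bounds. weightMemoryGB and activeFraction are illustrative helper names.

```javascript
// Rough weight-memory and sparsity arithmetic for the two GPT-OSS models.
// Assumption: all weights stored as MXFP4, i.e. 4-bit values plus one shared
// 8-bit scale per block of 32 values = 4 + 8/32 = 4.25 bits per parameter.
// Real checkpoints quantize only the MoE expert weights, and inference also
// needs KV-cache and activation memory, so these are lower bounds.
const MXFP4_BITS_PER_PARAM = 4 + 8 / 32;

// Memory for the weights alone, in decimal gigabytes (10^9 bytes).
function weightMemoryGB(totalParams, bitsPerParam = MXFP4_BITS_PER_PARAM) {
  return (totalParams * bitsPerParam) / 8 / 1e9;
}

// Share of parameters that take part in computing each token.
function activeFraction(activeParams, totalParams) {
  return activeParams / totalParams;
}

const MODELS = [
  { name: 'gpt-oss-120b', total: 117e9, active: 5.1e9 },
  { name: 'gpt-oss-20b', total: 21e9, active: 3.6e9 },
];

for (const m of MODELS) {
  const mx = weightMemoryGB(m.total).toFixed(1);
  const bf16 = weightMemoryGB(m.total, 16).toFixed(1);
  const pct = (100 * activeFraction(m.active, m.total)).toFixed(1);
  console.log(`${m.name}: ~${mx} GB (MXFP4) vs ~${bf16} GB (16-bit); ${pct}% of params active per token`);
}
```

Under this assumption gpt-oss-120b needs roughly 62 GB for its weights versus about 234 GB at 16 bits per parameter, which is why fitting it on one 80GB GPU depends on quantization; gpt-oss-20b comes to roughly 11 GB, consistent with the 16GB figure. Only about 4.4% (120b) and 17.1% (20b) of parameters are active per token, which cuts compute per token but not the memory needed to keep every expert loaded.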
Safety and Ethical Considerations

Safety Measures
OpenAI evaluated gpt-oss-120b under its Preparedness Framework and concluded that it does not reach high-risk capability levels in domains such as biological, chemical, or cyber threats. Developers are still responsible for implementing safeguards, such as output filtering or user authentication, to prevent misuse in production environments.

Fine-Tuning and Customization
Both models support fine-tuning. gpt-oss-20b can be fine-tuned on consumer hardware with libraries like transformers, while gpt-oss-120b requires high-end GPUs or cloud infrastructure because of its size. Fine-tuning enables customization for specific domains, such as legal document analysis or medical diagnostics, but developers must ensure ethical use.

Ethical Implications
The open-weight nature of GPT-OSS raises concerns about misuse, such as generating misinformation or malicious code. OpenAI encourages responsible use through community guidelines and recommends monitoring outputs in sensitive applications. Developers should also weigh issues such as bias in training data and the environmental impact of high-compute deployments. Transparency is key: developers should disclose when GPT-OSS is used in public-facing applications to maintain trust and accountability.

Future Directions

Potential Upgrades
OpenAI has hinted at future enhancements to GPT-OSS, including multimodal capabilities for processing images or audio and improved quantization for even lower resource requirements. These upgrades could extend the models to fields like computer vision or real-time speech processing.

Community Contributions
As open-weight models, GPT-OSS benefits from community-driven development. Researchers and developers can help optimize inference, create new fine-tuning datasets, or build tools that simplify deployment. OpenAI's GitHub repository for GPT-OSS invites collaboration under the Apache 2.0 license.

Conclusion
GPT-OSS gives the AI community flexible, high-performance models that bridge the gap between proprietary and open systems. Whether for research, enterprise applications, edge computing, or education, gpt-oss-120b and gpt-oss-20b cover a wide range of tasks. Their open-weight design fosters innovation but calls for responsible stewardship to mitigate risks.

Aug 6


Fixing "Cannot Find Module" on Vercel

When deploying a Node.js or Next.js project to Vercel, you may encounter the error:

    Error: Cannot find module 'your-module-name'

This usually means Vercel couldn't resolve a module your code imports. This article covers the common causes and how to fix them.

1. Check Module Installation

Ensure You Installed the Module
If you're using a third-party package (like axios or lodash), make sure it's installed in your project:

    npm install your-module-name

or

    yarn add your-module-name

Check Your package.json
Modules your code imports at runtime, especially in server-side code, should be listed under dependencies rather than only under devDependencies:

    "dependencies": {
      "your-module-name": "^1.0.0"
    }

2. Re-deploy After Lockfile Update

Push Your package-lock.json or yarn.lock
Make sure your lockfile is committed to Git. Vercel uses it to install your exact dependencies; if it is missing or out of date, the required modules may not be installed correctly.

    git add package-lock.json
    git commit -m "Update lockfile"
    git push

Trigger a Fresh Deploy
Go to your Vercel dashboard and trigger a redeploy to apply the changes.

3. Check for Case-Sensitive Imports

File/Folder Name Mismatch
Vercel builds run on Linux, whose file system is case-sensitive. This import:

    import myModule from './Utils/MyModule';

may work on a case-insensitive macOS or Windows machine but fails on Vercel if the actual file is ./utils/myModule.ts. Double-check your casing. Also note that Git on macOS and Windows may not record a rename that only changes letter case; use git mv to rename such files.

4. Module Path Issues with Monorepos

Use baseUrl or paths Correctly
If you're using a monorepo or custom module resolution, make sure your tsconfig.json (or jsconfig.json) is configured correctly and that your build setup on Vercel supports it.

Use Relative Paths If Possible
To avoid these issues, prefer relative paths over custom aliases unless aliases are necessary.

5. Vercel Build Settings

Custom Install Command
In your Vercel project settings, you can set a custom install command under Build & Development Settings. Make sure it runs npm install or yarn install correctly.

Set NODE_ENV If Needed
Some modules behave differently depending on NODE_ENV. In particular, if NODE_ENV is set to production during installation, npm skips devDependencies, so any build-time module listed there will be missing. Make sure your environment variables are set correctly in the Vercel dashboard.

Conclusion

The "Cannot find module" error on Vercel usually comes down to dependency installation, path resolution, or case sensitivity. Checking these areas carefully should give you a smooth deployment. If the error persists, read the full stack trace in the Vercel build logs, or reproduce the build locally before deploying (run vercel pull first so the CLI has your project settings):

    vercel build
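Case mismatches like the one in section 3 can be caught before you push. Below is a minimal sketch, assuming Node.js and plain relative imports; importResolvesExactly is a hypothetical helper (not part of Vercel, Next.js, or Node) that compares each path segment against the real directory listing, so it reports a mismatch even on a case-insensitive Mac or Windows disk. It only checks casing: it does not resolve aliases, package imports, or index files.

```javascript
// Hypothetical pre-push check: does a relative import specifier match the
// on-disk casing exactly, as a case-sensitive Linux build requires?
// Compares names against directory listings, so it also catches mismatches
// on case-insensitive macOS/Windows file systems.
const fs = require('fs');
const path = require('path');

// Extensions tried for the final segment (assumption; adjust for your project).
const EXTENSIONS = ['', '.js', '.jsx', '.ts', '.tsx', '.json'];

function importResolvesExactly(fromDir, specifier) {
  const parts = specifier.split('/').filter((p) => p !== '' && p !== '.');
  let current = path.resolve(fromDir);

  for (let i = 0; i < parts.length; i++) {
    const part = parts[i];
    if (part === '..') {
      current = path.dirname(current);
      continue;
    }

    let entries;
    try {
      entries = fs.readdirSync(current); // real names with their real casing
    } catch {
      return false; // parent path is not a readable directory
    }

    // Only the last segment may omit its file extension.
    const candidates = i === parts.length - 1 ? EXTENSIONS.map((ext) => part + ext) : [part];
    const match = candidates.find((name) => entries.includes(name)); // case-sensitive
    if (match === undefined) return false;
    current = path.join(current, match);
  }
  return true;
}

// Example: importResolvesExactly('src/components', './Utils/MyModule')
// is false when the file on disk is src/components/utils/myModule.ts.
```

Running a check like this over the relative imports in your source files (for example, from a Git pre-push hook) surfaces the mismatch locally instead of as a failed Vercel build.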

Jul 24

Latest News

Google and Apple Emergency Security Updates

Overview

In December 2025, both Google and Apple released emergency security updates to fix actively exploited zero-day vulnerabilities. The flaws were used in sophisticated hacking campaigns affecting an unknown number of users, so the updates are critical for protecting user privacy and device security.

Details on Apple's Response

Apple released iOS 18.7.3 and iPadOS 18.7.3 for iPhone XS and later and a range of iPad models. The patches fix two zero-day vulnerabilities that were exploited in an "extremely sophisticated attack." Users are urged to update immediately.
- Affected Devices: iPhone XS and later, iPad Pro 12.9-inch (3rd gen and later), iPad Pro 11-inch (1st gen and later), iPad Air (3rd gen and later), iPad (7th gen and later), iPad mini (5th gen and later).
- Vulnerabilities: Two zero-days, including one related to WebKit (CVE-2025-14174) that could allow remote code execution.
- Recommendation: Check for updates in Settings > General > Software Update.

Details on Google's Response

Google patched its ninth Chrome zero-day of 2025 and published the December 2025 Android Security Bulletin, which addresses 107 vulnerabilities, including two high-severity flaws under active exploitation. The fixes cover both Android devices and the Chrome browser.
- Chrome Update: Version includes fixes for three zero-days, one high-severity with active exploits.
- Android Bulletin: Patches for critical flaws in the framework and system components.
- How to Update: For Chrome, go to Help > About Google Chrome. For Android, install the latest system update from Settings (the exact menu varies by manufacturer), and also check Settings > Security > Google Play system update.

Impact and Recommendations

These vulnerabilities were exploited in targeted attacks, possibly by state-sponsored actors, which is why prompt updates matter. No widespread exploitation has been reported, but the risk remains high for unpatched devices. Users should:
- Enable automatic updates where possible.
- Avoid clicking suspicious links.
- Monitor for unusual device behavior.

Discord and GeForce NOW: Revolutionizing In-App Gaming

Introduction to a New Gaming Era Discord, the go-to platform for gamers and communities worldwide, has taken a monumental step forward by integrating NVIDIA’s GeForce NOW cloud gaming service. Announced at Gamescom 2025, this partnership allows users to play high-quality games directly within Discord, eliminating the need for downloads, installations, or external launchers. With Fortnite as the flagship title for this rollout, the collaboration promises to redefine how gamers connect, play, and discover new titles, all within the familiar Discord interface. How It Works: Seamless Integration The integration leverages NVIDIA’s Graphics Delivery Network (GDN), enabling users to launch games instantly within Discord’s voice or text channels. During a closed-door demo at Gamescom 2025, attendees experienced Fortnite streaming at up to 1440p resolution and 60 frames per second (FPS), showcasing smooth performance even on low-spec devices. This feature is designed to make gaming more accessible, as players can jump into sessions without worrying about hardware limitations or lengthy setup processes. The system also supports cross-platform play, ensuring that friends on different devices can game together effortlessly. The Power Behind the Scenes: Blackwell Architecture At the core of this integration is NVIDIA’s cutting-edge Blackwell architecture, which powers the upgraded GeForce NOW service. Starting in September 2025, GeForce NOW will roll out RTX 5080-class GPUs, boasting 62 teraflops of compute power, a 48GB frame buffer, and 2TB/s of memory bandwidth. This upgrade enables streaming at resolutions up to 5K at 120 FPS, with a new Cinematic Quality Streaming (CQS) mode that enhances visual fidelity for an immersive experience. The architecture ensures low latency and high performance, making cloud gaming feel as responsive as local play. 
Enhancing Social Gaming Discord’s 2025 user data highlights the platform’s role in social gaming: 72% of its 150 million monthly active users play games with friends weekly, and group sessions last seven times longer than solo play. The GeForce NOW integration capitalizes on this by embedding gaming directly into Discord’s social framework. For example, users can initiate a 30-minute Fortnite trial within a voice channel, inviting friends to join instantly. This feature fosters spontaneous gaming sessions, making it easier for communities to connect over shared gaming experiences. Additionally, a limited-time free trial of GeForce NOW’s Performance tier will be offered, requiring only an Epic Games account to start playing, lowering the barrier to entry. Expanding the Game Library with Install-to-PlayOne of the standout features of this integration is the “Install-to-Play” functionality, which allows users to access their existing PC game libraries on GeForce NOW without re-installing titles for each session. This feature will double the GeForce NOW library to over 4,500 titles, including popular games from platforms like Steam, Epic Games Store, and Xbox. By saving game progress in the cloud, players can pick up where they left off, creating a seamless experience across devices. This is particularly appealing for Discord users who already manage their gaming communities and libraries within the platform. What’s Next: The Future of Discord Gaming While the Gamescom 2025 demo was a promising first look, NVIDIA and Discord have not yet announced an official release date for the full integration. However, the partnership is expected to evolve rapidly, with plans to support additional titles and features. The integration aligns with Discord’s broader mission to be more than just a communication tool, positioning it as a central hub for gaming, social interaction, and content discovery. 
Future updates may include deeper integration with Discord's Activities and Shop features, allowing users to discover and purchase games directly within the app.

Challenges and Considerations

Despite the excitement, there are challenges to address. The integration depends on reliable internet connections, since cloud gaming needs stable, high-speed bandwidth to keep latency low. And while the free trial makes the service accessible, the full GeForce NOW experience may require a paid subscription for extended playtime or premium features; those details have not been disclosed yet. Discord and NVIDIA will need to keep the experience intuitive and inclusive to preserve the platform's broad appeal.

Conclusion

The partnership between Discord and GeForce NOW marks a significant milestone in the evolution of cloud gaming. By combining Discord's social platform with NVIDIA's cloud infrastructure, the integration offers a glimpse of a future where accessibility, performance, and community converge. As the rollout progresses, gamers can look forward to a more connected experience, with Discord positioned to become a one-stop destination for both communication and play.

The quickest way to fix the Minecraft getsockopt error

The "Connection Timed Out: getsockopt" error in Minecraft is a common network issue that prevents players from connecting to servers or LAN games. This guide walks through step-by-step fixes, focusing on firewall settings, network configuration, and other troubleshooting methods.

Understanding the Getsockopt Error

The getsockopt error appears when Minecraft fails to establish a connection with a server because of a network problem such as a firewall restriction, a misconfigured port, or a DNS issue. The full message usually reads "Connection Timed Out: getsockopt". It occurs most often on Windows, typically when security settings block the Java executable Minecraft runs on, for example after the firewall prompt for Java was dismissed or denied.

Quick Fixes to Try First

Before diving into advanced solutions, try these simple steps.

Restart Minecraft and Your Network
1. Close Minecraft completely and relaunch it.
2. Check your internet connection by loading a website or playing another online game.
3. Restart your router: unplug it for 30 seconds, then plug it back in.

Ensure Matching Game Versions
1. Confirm that you and your friends are running the same Minecraft version.
2. For LAN games, make sure all players are on the same network.

Adjusting Firewall Settings

The most common cause of the getsockopt error is Windows Defender Firewall blocking Minecraft's Java executable. Follow these steps to allow Minecraft through the firewall.

Allow Minecraft and Java Through the Firewall
1. Open the Start menu and search for Windows Defender Firewall.
2. Select Allow an app or feature through Windows Defender Firewall.
3. Click Change settings (admin privileges required).
4. Locate Java-related entries such as javaw.exe or Java Platform SE binary, and check both the Private and Public boxes next to them.
5. If Minecraft isn't listed, click Allow another app, then Browse, and add Minecraft.exe and MinecraftLauncher.exe.
6. Save your changes and test the connection.

Note: Turning off the firewall entirely is not recommended, as it leaves your system vulnerable. Allowing specific apps is safer.

Exclude Minecraft from Windows Defender

On Windows 10/11, excluding Minecraft from Windows Defender scanning can also help.
1. Go to Start > Settings > Update & Security > Windows Security > Virus & threat protection (on Windows 11: Settings > Privacy & security > Windows Security > Virus & threat protection).
2. Under Virus & threat protection settings, click Manage settings.
3. Scroll to Exclusions and select Add or remove exclusions.
4. Add the Minecraft installation folder.
5. Test the connection again.

Configuring Network Settings

If firewall adjustments don't work, a network misconfiguration may be the culprit.

Change DNS Settings

Switching to Google's public DNS can improve connection stability.
1. Open Control Panel > Network and Internet > Network and Sharing Center.
2. Click Change adapter settings.
3. Right-click your active connection (Ethernet or Wi-Fi) and select Properties.
4. Select Internet Protocol Version 4 (TCP/IPv4) and click Properties.
5. Choose Use the following DNS server addresses and enter:
   - Preferred DNS server: 8.8.8.8
   - Alternate DNS server: 8.8.4.4
6. Save your changes and restart your computer.

Set Up Port Forwarding

If you're hosting a server, make sure port 25565 is forwarded to your computer.
1. Log into your router (usually at 192.168.1.1 or 192.168.0.1).
2. Find the Port Forwarding or Virtual Servers section.
3. Add a new rule:
   - Service port: 25565
   - Internal port: 25565
   - IP address: your computer's local IP (find it by running ipconfig in Command Prompt)
   - Protocol: TCP/UDP
4. Save and restart your router.
5. Verify the port: netstat -an shows whether your server is listening locally on 25565, while an online port checker confirms the port is reachable from outside your network.

Advanced Troubleshooting

If the error persists, try these additional steps.

Check for Antivirus Interference

Antivirus software may block Minecraft's connections.
1. Temporarily disable your antivirus, or add Minecraft and Java to its exceptions list.
2. If the error goes away, configure your antivirus to allow Minecraft.exe and javaw.exe permanently, then re-enable it.

Update Java and Minecraft
1. Make sure you have the Java version your Minecraft release requires (for example, Java 21 for Minecraft 1.20.5 and later). The official launcher bundles the correct runtime. If you run a server or a third-party launcher, install a matching JDK yourself, since java.com only offers Java 8.
2. Update Minecraft to the latest version via the launcher.

Check Server Status
1. If you are connecting to a public server, check whether it is online using a status site such as minecraftstatus.net.
2. For hosted servers, confirm the server is running and not in maintenance, and contact the host's support if needed.

When to Contact Support

If none of the above solutions work:
1. File a bug report on Mojang's bug tracker at bugs.mojang.com.
2. Contact Mojang Support via the official Minecraft website.
3. For third-party servers, reach out to the server admin or hosting provider.

Conclusion

The getsockopt error in Minecraft is usually caused by firewall blocks, network misconfiguration, or outdated software. Start with the firewall adjustments, as they resolve the issue for most players. If that fails, work through DNS changes, port forwarding, and the advanced troubleshooting steps. With these steps, you'll likely be back in your Minecraft world in no time. Happy gaming!
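If you are comfortable with Node.js, you can script the two network checks from the port-forwarding steps: finding your local IP and confirming that port 25565 accepts TCP connections. This is a minimal sketch that uses only Node's built-in `net` and `os` modules. The function names are illustrative and not part of any Minecraft tool. A closed, filtered, or unreachable port resolves to `false`, which is the same condition that surfaces in Minecraft as the getsockopt timeout.

```javascript
const net = require('net');
const os = require('os');

// List this machine's non-internal IPv4 addresses: the "IPv4 Address"
// values ipconfig shows, i.e. candidates for the port-forwarding rule.
function localIPv4Addresses() {
  const result = [];
  for (const addrs of Object.values(os.networkInterfaces())) {
    for (const addr of addrs || []) {
      // `family` is 'IPv4' on most Node versions (a number on Node 18.0-18.3).
      const isV4 = addr.family === 'IPv4' || addr.family === 4;
      if (isV4 && !addr.internal) result.push(addr.address);
    }
  }
  return result;
}

// Try a TCP connection to host:port. Resolves true if it connects, and false
// on refusal, an unreachable host, or a timeout ("Connection timed out").
function checkPort(host, port = 25565, timeoutMs = 3000) {
  return new Promise((resolve) => {
    const socket = net.createConnection({ host, port });
    const finish = (open) => {
      socket.destroy();
      resolve(open); // later calls are ignored once the promise has settled
    };
    socket.setTimeout(timeoutMs);
    socket.once('connect', () => finish(true));
    socket.once('timeout', () => finish(false));
    socket.once('error', () => finish(false));
  });
}
```

Run `checkPort('203.0.113.10').then(console.log)` from outside your network, where the address is a placeholder for your public IP. It should print `true` once forwarding works. Running the same check on the host machine against `127.0.0.1` only proves the server is listening, not that the router forwards the port.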

The quickest way to fix React CORS errors

Introduction

Cross-Origin Resource Sharing (CORS) errors occur in React applications when the browser blocks a response from a different origin (domain, protocol, or port) because of its security policy. The error typically reads "No 'Access-Control-Allow-Origin' header is present on the requested resource." This article explains how to diagnose and resolve CORS issues in React apps, with practical solutions for development and production environments.

Understanding CORS in React

CORS errors arise when a React frontend (e.g., running on localhost:3000) fetches data from an API on a different origin (e.g., api.example.com). The browser enforces the Same-Origin Policy, so the server must send specific response headers to allow cross-origin requests.

Causes of CORS Errors
- The server does not include an Access-Control-Allow-Origin header for the client's origin.
- Mismatched protocols (e.g., an HTTP frontend requesting an HTTPS API), which makes the request cross-origin.
- Local development requests hitting a production server.
- Incorrectly configured API endpoints or headers.

Solutions for CORS Errors in React

Server-Side Configuration

The most reliable fix is to configure the server to allow requests from your React app's origin.

Steps:
1. For Node.js/Express servers, install the cors package: npm install cors
2. Add middleware that allows specific origins:

```javascript
const express = require('express');
const cors = require('cors');

const app = express();

// Allow cross-origin requests only from the React dev server
app.use(cors({ origin: 'http://localhost:3000' }));
```

3. For production, change origin to your deployed frontend URL (e.g., 'https://your-app.com').
4. Verify the headers in browser DevTools (Network tab) to ensure Access-Control-Allow-Origin matches your frontend's origin.
5. For APIs you don't control, check their documentation for CORS support or contact the provider.

Proxy Setup in Development

React's development server (Create React App) supports proxying to bypass CORS during development.
Steps:
1. Install http-proxy-middleware: npm install http-proxy-middleware
2. Create src/setupProxy.js in your React project:

```javascript
const { createProxyMiddleware } = require('http-proxy-middleware');

// Forward /api requests from the dev server to the real API
module.exports = function (app) {
  app.use(
    '/api',
    createProxyMiddleware({
      target: 'https://api.example.com',
      changeOrigin: true, // rewrite the Host header to match the target
    })
  );
};
```

3. Update API calls to use relative paths (e.g., fetch('/api/data') instead of fetch('https://api.example.com/data')).
4. Restart the development server to apply the changes.

Note: This is a development-only solution. The proxy does not exist in a production build.

Using Fetch with CORS Mode

Make sure your fetch requests are configured correctly.

Steps:
1. Optionally set { mode: 'cors' } in the fetch options:

```javascript
fetch('https://api.example.com/data', { mode: 'cors' })
  .then((response) => response.json())
  .then((data) => console.log(data))
  .catch((error) => console.error('CORS error:', error));
```

2. 'cors' is already the default mode for fetch(), so setting it explicitly documents intent but changes nothing. If the server doesn't send CORS headers, the request will still fail.
3. For POST requests with Content-Type: 'application/json', the browser first sends a preflight OPTIONS request. The server must allow the method via Access-Control-Allow-Methods and the header via Access-Control-Allow-Headers.

JSONP as a Fallback (Limited Use)

For GET requests to APIs that lack CORS support but do offer JSONP, JSONP can be a workaround, though it is outdated and less secure.

Steps:
1. Install a library such as jsonp: npm install jsonp
2. Example:

```javascript
import jsonp from 'jsonp';

// The library appends its own callback query parameter (named "callback"
// by default), so pass the plain endpoint URL.
jsonp('https://api.example.com/data', (err, data) => {
  if (err) console.error(err);
  else console.log(data);
});
```

3. Avoid JSONP for sensitive data because of its security risks (e.g., script injection), and use it only when other solutions are not feasible.

Handling Errors Gracefully

Improve the user experience by catching and handling CORS errors in your React app.
Steps:
1. Wrap API calls in try/catch:

```javascript
async function fetchData() {
  try {
    const response = await fetch('https://api.example.com/data');
    // A request blocked by CORS rejects with a TypeError before this line;
    // non-2xx responses that pass CORS are caught here.
    if (!response.ok) throw new Error(`HTTP ${response.status}`);
    const data = await response.json();
    return data;
  } catch (error) {
    console.error('CORS or network error:', error);
    alert('Failed to fetch data. Please try again later.');
  }
}
```

2. Display user-friendly messages with components such as a Snackbar or Dialog from libraries like Material UI.
3. Log errors to monitoring tools like Sentry for debugging.

Testing and Debugging
- Open DevTools > Network tab, reproduce the request, and check for CORS-related headers or errors.
- Use tools like Postman to test API endpoints independently. Postman does not enforce CORS, so if the API works in Postman but fails in the browser, the server's CORS headers are the likely problem.
- Test in different browsers, as some (e.g., Firefox) provide clearer CORS error messages.

Production Considerations
- Serve the frontend and API from the same origin where possible (e.g., https://example.com and https://example.com/api). Subdomains such as app.example.com and api.example.com are still different origins and still need CORS headers.
- Use a reverse proxy (e.g., Nginx) to route API requests under the frontend's origin.
- Enable HTTPS for both frontend and backend to avoid mixed content errors.
- For multiple allowed origins in production, decide the CORS origin dynamically:

```javascript
// Example values: replace with your real frontend origins
const allowedOrigins = ['https://your-app.com', 'http://localhost:3000'];

app.use(
  cors({
    origin: (origin, callback) => {
      // Requests without an Origin header (curl, server-to-server) are allowed
      if (!origin || allowedOrigins.includes(origin)) {
        callback(null, true);
      } else {
        callback(new Error('Not allowed by CORS'));
      }
    },
  })
);
```

Best Practices
- Always configure CORS on the server when possible, as client-side workarounds are less reliable.
- Use environment variables for API URLs to switch between development and production easily.
- Monitor CORS errors in production with tools like Sentry or LogRocket.
- Write unit tests for API calls using Jest and msw to mock responses and test error handling.
- Show users clear error messages instead of letting requests fail silently.
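The Express `cors` middleware handles these headers for you. To see what actually goes over the wire, including the preflight that a JSON POST triggers, here is a dependency-free sketch using Node's built-in `http` module. The allowlist reuses this article's example origins. The endpoint, response body, and helper names are placeholders, not part of any library.

```javascript
const http = require('http');

// Example allowlist (assumption: replace with your real frontend origins).
const ALLOWED_ORIGINS = ['http://localhost:3000', 'https://your-app.com'];

// Build CORS response headers for a request whose Origin header is `origin`.
// Disallowed or missing origins get no Access-Control-Allow-Origin header,
// so the browser refuses to expose the response to the page.
function corsHeaders(origin, allowed = ALLOWED_ORIGINS) {
  // Vary: Origin tells caches that the response differs per requesting origin.
  const headers = { Vary: 'Origin' };
  if (origin && allowed.includes(origin)) {
    headers['Access-Control-Allow-Origin'] = origin; // echo the exact origin
    headers['Access-Control-Allow-Methods'] = 'GET, POST, OPTIONS';
    headers['Access-Control-Allow-Headers'] = 'Content-Type';
  }
  return headers;
}

// Create (but do not start) a server with one JSON endpoint.
function createServer() {
  return http.createServer((req, res) => {
    const headers = corsHeaders(req.headers.origin);
    // Browsers send an OPTIONS preflight before e.g. a JSON POST;
    // answer it with the CORS headers and an empty body.
    if (req.method === 'OPTIONS') {
      res.writeHead(204, headers);
      res.end();
      return;
    }
    res.writeHead(200, { ...headers, 'Content-Type': 'application/json' });
    res.end(JSON.stringify({ message: 'hello from the API' }));
  });
}
```

Start it with `createServer().listen(4000)` and point the React app at `http://localhost:4000`; the port is arbitrary. Echoing the exact allowed origin, rather than sending `*`, keeps the door open for credentialed requests, which browsers reject when the allowed origin is a wildcard.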
Conclusion

CORS errors in React are resolved by configuring the server or, during development, by using a proxy. Handling errors gracefully keeps the app usable when a request still fails. By combining server-side fixes with robust client-side error handling, you can ensure a smooth user experience. Regular testing and monitoring help catch and fix issues early.

Exploring GPT-OSS: OpenAI's Open-Weight Language Models

The release of GPT-OSS by OpenAI marks a significant moment in the democratization of artificial intelligence. By offering open-weight models, OpenAI lets developers, researchers, and organizations use advanced language models without relying on proprietary APIs. This article examines the GPT-OSS family: its architecture, performance, deployment options, use cases, and ethical considerations. The series includes two models: gpt-oss-120b with 117 billion parameters and gpt-oss-20b with 21 billion parameters. They are designed for tasks ranging from complex reasoning to efficient inference on local and edge hardware, making them versatile tools for both enterprise and individual use.

What is GPT-OSS?

Background and Purpose

GPT-OSS (OSS is generally read as "open-source software") is OpenAI's first open-weight language model release since GPT-2 in 2019. Released under the Apache 2.0 license, gpt-oss-120b and gpt-oss-20b are designed for a wide range of applications, including natural language processing, code generation, and agentic workflows. Unlike fully open-source models, GPT-OSS provides the model weights but not the training data, which still allows local deployment and customization. The Apache 2.0 license itself permits commercial use. The release aims to foster innovation by giving researchers and developers tools to experiment with, fine-tune, and deploy AI models without the constraints of cloud-based APIs.

Key Features and Capabilities

Reasoning Capabilities: Both models support chain-of-thought reasoning with configurable effort levels (low, medium, high), letting users trade computational cost against response quality.

Mixture-of-Experts Architecture: gpt-oss-120b activates 5.1 billion parameters per token by routing each token to a small subset of its 128 experts, while gpt-oss-20b activates 3.6 billion. This keeps inference efficient relative to total model size without sacrificing performance.
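To make "activates 5.1 billion parameters per token" concrete, here is a toy top-k Mixture-of-Experts routing step in JavaScript. It illustrates the idea under simplifying assumptions and is not gpt-oss's actual implementation. The experts are plain functions, the router logits are supplied directly rather than computed by a learned layer, and the gate weights are a softmax over the selected experts' logits (one common MoE choice).

```javascript
// Numerically stable softmax.
function softmax(xs) {
  const max = Math.max(...xs);
  const exps = xs.map((x) => Math.exp(x - max));
  const sum = exps.reduce((a, b) => a + b, 0);
  return exps.map((e) => e / sum);
}

// Indices and logits of the k experts with the highest router logits.
function topK(logits, k) {
  return logits
    .map((value, index) => ({ value, index }))
    .sort((a, b) => b.value - a.value)
    .slice(0, k);
}

// One MoE layer for a single token vector `x`.
// routerLogits[i] scores expert i; experts[i] maps a vector to a vector.
function moeForward(x, routerLogits, experts, k = 4) {
  const chosen = topK(routerLogits, k);
  const gates = softmax(chosen.map((c) => c.value)); // weights sum to 1
  const out = new Array(x.length).fill(0);
  chosen.forEach((c, i) => {
    const y = experts[c.index](x); // only the k chosen experts are evaluated
    for (let d = 0; d < out.length; d++) out[d] += gates[i] * y[d];
  });
  return { out, used: chosen.map((c) => c.index) };
}
```

Only the `k` chosen experts run for a given token, so per-token compute scales with the active parameters rather than the total. With 4 experts selected per token, gpt-oss-120b uses about 5.1B of its 117B parameters for each token.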
Extended Context Window: A 128,000-token context window enables processing of long documents, conversations, or codebases.

Quantization Support: Native MXFP4 quantization of the MoE weights lets gpt-oss-120b run on a single 80GB GPU and gpt-oss-20b on devices with as little as 16GB of memory, putting the smaller model within reach of consumer hardware.

Multimodal Potential: Both models are text-only at release. Support for inputs such as images would require future extensions.

Technical Architecture

Model Design

Both GPT-OSS models are transformers built on a Mixture-of-Experts framework. They alternate dense and locally banded sparse attention layers, similar to GPT-3, but optimized for efficiency. gpt-oss-120b has 36 layers and 128 experts, with 4 experts active per token, a very sparse configuration. gpt-oss-20b is leaner, with 24 layers and 32 experts (also 4 active per token), and is designed for resource-constrained environments. Both models use Rotary Positional Embedding (RoPE) to support the 128,000-token context window and grouped multi-query attention to reduce memory overhead during inference.

Performance Benchmarks

gpt-oss-120b: Competes closely with OpenAI's proprietary o4-mini on benchmarks such as Codeforces (competitive coding), MMLU (general knowledge), and TauBench (tool use). It outperforms o4-mini on HealthBench (health-related queries) and on AIME 2024 and 2025 competition math problems.

gpt-oss-20b: Matches or surpasses o3-mini on the same benchmarks despite its small size, with particularly strong results on competition math and health queries. Its efficiency makes it well suited to lightweight applications.

These results show the models can handle complex tasks while remaining resource-efficient.

Deployment Options

Local Deployment

Running GPT-OSS locally is straightforward with tools like Ollama, LM Studio, or Hugging Face's transformers library. For gpt-oss-20b, pull the model and run it with your tool of choice (for example, ollama pull gpt-oss:20b followed by ollama run gpt-oss:20b).
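Once `gpt-oss:20b` has been pulled, Node.js 18 or later (which provides a global `fetch`) can call the local Ollama server directly. This sketch assumes Ollama is running on its default port 11434 and uses its `/api/chat` endpoint with streaming disabled. The helper names `buildChatRequest` and `chat` are illustrative.

```javascript
// Assumption: Ollama is running locally on its default port.
const OLLAMA_URL = 'http://localhost:11434/api/chat';

// Build the JSON body for Ollama's /api/chat endpoint.
function buildChatRequest(prompt, model = 'gpt-oss:20b') {
  return {
    model,
    messages: [{ role: 'user', content: prompt }],
    stream: false, // ask for one JSON response instead of streamed chunks
  };
}

// Send a single prompt and return the assistant's reply text.
async function chat(prompt, model) {
  const res = await fetch(OLLAMA_URL, {
    method: 'POST',
    headers: { 'Content-Type': 'application/json' },
    body: JSON.stringify(buildChatRequest(prompt, model)),
  });
  if (!res.ok) throw new Error(`Ollama returned HTTP ${res.status}`);
  const data = await res.json();
  return data.message.content; // non-streaming replies carry a single message
}
```

For example, `chat('Explain Mixture-of-Experts in two sentences.').then(console.log)` prints the model's answer. Passing `'gpt-oss:120b'` as the second argument targets the larger model if your hardware can hold it.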
For gpt-oss-120b, a single 80GB GPU such as an H100 (or equivalent) is required; with Ollama, the model tag is gpt-oss:120b. Local deployment keeps data on your own hardware and removes reliance on cloud services, which suits sensitive applications.

Cloud Deployment

Cloud providers and platforms such as Azure, AWS, and Northflank support GPT-OSS for scalable inference. A sample setup on Northflank serves the model with tensor parallelism for high-throughput applications such as real-time chatbots or automated content generation. Hosted API access is also available from a number of third-party inference providers; check your provider's model catalog for details.

Use Cases and Applications

Research and Development

Researchers can use GPT-OSS to explore novel AI architectures, fine-tune models for domain-specific tasks, or benchmark against proprietary systems. Because the weights are open, experiments in areas like transfer learning or reinforcement learning have full access to model parameters.

Enterprise Applications

Businesses can deploy GPT-OSS for tasks like automated customer support, document summarization, or code review. For example, a company could fine-tune gpt-oss-20b to generate technical documentation from its codebases, running it on-premises to keep data secure.

Edge Computing

gpt-oss-20b's low resource requirements make it suitable for edge devices, such as IoT systems or mobile applications, that meet its roughly 16GB memory requirement. For instance, it can power offline chatbots or real-time translation apps.

Education and Training

Educational institutions can use GPT-OSS to teach AI concepts, build interactive learning tools, or create personalized tutoring systems. The models' ability to handle complex reasoning makes them well suited to generating practice problems or explaining concepts in subjects like mathematics and computer science.
Safety and Ethical Considerations

Safety Measures

OpenAI evaluated gpt-oss-120b under its Preparedness Framework and reported that it does not reach high-risk capability levels in the biological, chemical, or cyber domains. Developers remain responsible for implementing safeguards, such as output filtering or user authentication, to prevent misuse in production environments.

Fine-Tuning and Customization

Both models support fine-tuning. gpt-oss-20b can be fine-tuned on consumer hardware with libraries like transformers. gpt-oss-120b requires high-end GPUs or cloud infrastructure because of its size. Fine-tuning enables customization for domains such as legal document analysis or medical diagnostics, but developers must ensure ethical use.

Ethical Implications

The open-weight nature of GPT-OSS raises concerns about potential misuse, such as generating misinformation or malicious code. OpenAI encourages responsible use through its usage guidelines and recommends monitoring outputs in sensitive applications. Developers should also weigh issues such as bias in training data and the environmental impact of high-compute deployments.

Transparency is key: developers should disclose when GPT-OSS is used in public-facing applications to maintain trust and accountability.

Future Directions

Potential Upgrades

Possible future enhancements to GPT-OSS include multimodal capabilities for processing images or audio and improved quantization for even lower resource requirements. Such upgrades could extend the models to fields like computer vision or real-time speech processing.

Community Contributions

As open-weight models, GPT-OSS benefits from community-driven development. Researchers and developers can contribute by optimizing inference, creating new fine-tuning datasets, or building tools that simplify deployment.
OpenAI's GitHub repository for GPT-OSS encourages collaboration under the Apache 2.0 license.

Conclusion

GPT-OSS gives the AI community flexible, high-performance models that bridge the gap between proprietary and open systems. Whether for research, enterprise applications, edge computing, or education, gpt-oss-120b and gpt-oss-20b offer capable options for a wide range of tasks. Their open-weight design fosters innovation while requiring responsible stewardship to mitigate risks. For deployment details, see OpenAI's gpt-oss GitHub repository and the model cards.


Fixing "Cannot Find Module" on Vercel

When deploying your Node.js or Next.js project to Vercel, you may encounter the error:

Error: Cannot find module 'your-module-name'

This usually means Vercel couldn't resolve a module your code imports. This article covers the common causes and how to fix them.

1. Check Module Installation

Ensure You Installed the Module

If you're using a third-party package (like axios or lodash), make sure it's installed in your project:

```shell
npm install your-module-name
# or
yarn add your-module-name
```

Check Your package.json

Packages your code needs at runtime should be listed under dependencies, not only devDependencies, especially if they're used in server-side code:

```json
"dependencies": {
  "your-module-name": "^1.0.0"
}
```

2. Redeploy After Updating the Lockfile

Push Your package-lock.json or yarn.lock

Make sure your lockfile is committed to Git. Vercel uses it to install your exact dependency versions. If it is missing or outdated, the required modules may not be installed correctly.

```shell
git add package-lock.json
git commit -m "Update lockfile"
git push
```

Trigger a Fresh Deploy

Go to your Vercel dashboard and trigger a redeploy to apply the changes.

3. Check for Case-Sensitive Imports

File/Folder Name Mismatch

Vercel builds on a Linux-based file system, which is case-sensitive, unlike the default file systems on Windows and macOS. This means:

```javascript
import myModule from './Utils/MyModule';
```

will fail if the actual file is ./utils/myModule.ts. Double-check your casing.

4. Module Path Issues with Monorepos

Use baseUrl or paths Correctly

If you're using a monorepo or custom module resolution, make sure baseUrl and paths in your tsconfig.json (or jsconfig.json) are configured correctly and that your build tooling on Vercel honors them (Next.js reads them natively).

Use Relative Paths If Possible

To avoid issues, prefer relative paths over custom aliases unless aliases are necessary.

5. Vercel Build Settings

Custom Install Command

In your Vercel project settings, you can set a custom install command under Build & Development Settings. Make sure it runs npm install or yarn install correctly.

Set NODE_ENV If Needed

Some modules behave differently depending on NODE_ENV.
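Make sure your environment variables are set correctly in the Vercel dashboard. In particular, npm skips devDependencies when NODE_ENV is set to production at install time, which can cause "Cannot find module" errors for build-time packages.

Conclusion

The "Cannot find module" error on Vercel is usually related to dependency installation, path resolution, or case sensitivity. Checking these areas carefully should get you to a smooth deployment. If the error persists, consult the Vercel build logs for the full stack trace. You can also reproduce the build locally before deploying (after vercel pull has fetched your project settings) with:

```shell
vercel build
```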
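Windows and macOS usually hide case mismatches locally, so a small pre-deploy check can catch them before Vercel's Linux build does. This is a minimal sketch using Node's `fs` and `path` modules. The helper `checkImportCase` resolves a relative import one segment at a time, compares each name exactly, and reports the casing actually on disk. The suffix list is an assumption to adjust for your project. The sketch also does not scan your source files for imports; you pass it the import path.

```javascript
const fs = require('fs');
const path = require('path');

// Suffixes tried when an import omits the extension (assumption: adjust
// to match your bundler's resolution order).
const SUFFIXES = ['', '.ts', '.tsx', '.js', '.jsx', '/index.ts', '/index.js'];

// Resolve `segments` under `dir`, preferring an exact-case match for each
// name and falling back to a case-insensitive one. Returns the on-disk
// names, or null if some segment does not exist in any casing.
function matchSegments(dir, segments) {
  let current = dir;
  const actual = [];
  for (const seg of segments) {
    if (seg === '..') {
      current = path.dirname(current);
      actual.push(seg);
      continue;
    }
    let entries;
    try {
      entries = fs.readdirSync(current);
    } catch {
      return null; // `current` is not a readable directory
    }
    const hit =
      entries.find((e) => e === seg) ??
      entries.find((e) => e.toLowerCase() === seg.toLowerCase());
    if (hit === undefined) return null;
    actual.push(hit);
    current = path.join(current, hit);
  }
  return actual;
}

// Check a relative import such as './Utils/MyModule' from directory `fromDir`.
// Returns { exact, actual } when a file is found (exact === false means it
// only matches with different casing, which fails on Vercel), or null.
function checkImportCase(fromDir, importPath) {
  for (const suffix of SUFFIXES) {
    // Drop '.' and empty segments; keep '..' so parent references work.
    const wanted = (importPath + suffix).split('/').filter((s) => s && s !== '.');
    const actual = matchSegments(fromDir, wanted);
    if (actual && fs.statSync(path.join(fromDir, ...actual)).isFile()) {
      const exact = actual.every((name, i) => name === wanted[i]);
      return { exact, actual: './' + actual.join('/') };
    }
  }
  return null;
}
```

Take the example from section 3, with the file at `src/utils/myModule.ts`. Calling `checkImportCase('src', './Utils/MyModule')` reports `exact: false` along with the on-disk path `./utils/myModule.ts`, which is the casing to use in the import.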

iPhone 17 Release: What We Know So Far

Apple's iPhone 17 series is generating significant buzz as its anticipated launch approaches. Expected to debut in September 2025, the lineup is rumored to include four models: iPhone 17, iPhone 17 Air, iPhone 17 Pro, and iPhone 17 Pro Max. Below is an overview of the expected release details, based on recent leaks and Apple's historical patterns.

Release Timeline

Expected Announcement and Launch

Apple traditionally unveils its iPhone lineup in the second week of September, typically on a Tuesday or Wednesday. Industry insiders, including Bloomberg's Mark Gurman, suggest the iPhone 17 series will follow this schedule, with a keynote event likely on September 9 or 10, 2025. Preorders are expected to begin on Friday, September 12, with general availability starting on Friday, September 19, 2025.

Potential Delays

While no delays have been confirmed, past launches such as the iPhone X and XR saw staggered releases due to production challenges. The ultra-thin iPhone 17 Air could face similar hurdles, though current reports suggest all models will launch simultaneously.

Key Features and Models

iPhone 17 Lineup

The iPhone 17 series is expected to include:
- iPhone 17: A base model with a 6.3-inch OLED display, A19 chip, and 8GB RAM.
- iPhone 17 Air: A new ultra-thin model (5.5–6.25mm thick) with a 6.6-inch OLED display, A19 chip, and a single 48MP rear camera.
- iPhone 17 Pro: A 6.3-inch display, A19 Pro chip, 12GB RAM, and a triple-camera system with a 48MP telephoto lens.
- iPhone 17 Pro Max: The flagship, with a 6.9-inch display, A19 Pro chip, 12GB RAM, and a potential 5,000mAh battery.

The "Plus" model is reportedly being replaced by the iPhone 17 Air, which prioritizes a slim design over battery capacity.

Design and Camera Upgrades

The iPhone 17 Air is set to be Apple's thinnest iPhone ever, potentially as slim as 5.5mm, compared with the iPhone 6's 6.9mm.
The Pro models may feature a part-aluminum, part-glass design for durability and a redesigned horizontal camera bar. All models are expected to have a 24MP front camera, with the Pro models adding a 48MP telephoto lens and possibly 8K video recording.

Pricing and Market Factors

Expected Pricing

Pricing remains speculative, but leaks suggest:
- iPhone 17: ~$799
- iPhone 17 Air: ~$899
- iPhone 17 Pro: ~$1,099
- iPhone 17 Pro Max: ~$1,199

Reports indicate potential price hikes due to increased production costs and U.S. tariffs, though local assembly in India may stabilize prices in some markets, such as Japan.

Impact of Tariffs

U.S. tariffs, recently set at 55% for imports from China, could affect pricing, though Apple's shift to manufacturing in India may mitigate this. The Wall Street Journal notes that Apple may attribute price increases to enhanced features to avoid tariff-related backlash.

Conclusion

The iPhone 17 series, expected to launch on September 19, 2025, promises significant upgrades, including the slim iPhone 17 Air, enhanced cameras, and powerful A19 chips. While prices may rise due to tariffs and production costs, Apple's consistent release schedule and new features make this lineup highly anticipated. Stay tuned for official announcements as September approaches.

Google's AI-Powered Business Calling Feature

Overview of the Feature

Functionality and Purpose

On July 17, 2025, Google introduced an AI-powered business calling feature for U.S. users, designed to enhance the Google Search experience. The feature lets an AI agent call local businesses on a user's behalf to retrieve specific information, such as store hours, product availability, or service details. The AI then summarizes the call and presents the results, so users get real-time business information without making the call themselves.

Technology Behind the Feature

The business calling feature uses Google's AI models, likely built on Gemini or related technologies, to handle natural language processing and automated call interactions. This allows the AI to hold human-like conversations with business staff and capture accurate, relevant answers. The feature is integrated with Google Search and accessible from mobile or desktop queries.

Impact and Implications

Benefits for Users and Businesses

For users, the feature saves time and simplifies finding up-to-date business information, particularly for small or local establishments. Businesses may see increased visibility and customer engagement through Google Search, as AI-driven queries could drive more traffic to their services. The feature is currently available only in the U.S., with potential for global expansion depending on its success.

Privacy and Ethical Considerations

Google has emphasized that the feature prioritizes user privacy, with calls made anonymously and no personal user data shared with businesses. However, concerns may arise about AI-driven automation replacing human interactions and about the ethics of AI making unsolicited calls. Google has not yet detailed how it will address potential misuse or business opt-out preferences.
Future Developments

Integration with Other Google Services

The AI calling feature could integrate with Google Maps, Google Assistant, or Google Business Profiles, making it more useful for navigation and local discovery. Future updates may include multilingual support or expanded query types, such as booking appointments or checking wait times.

Competitive Landscape

This feature could put Google ahead of competitors such as Amazon's Alexa and Apple's Siri in AI-driven local search. By combining AI with real-time business interactions, Google strengthens its position in search and local services, potentially challenging traditional directory services and customer support systems.

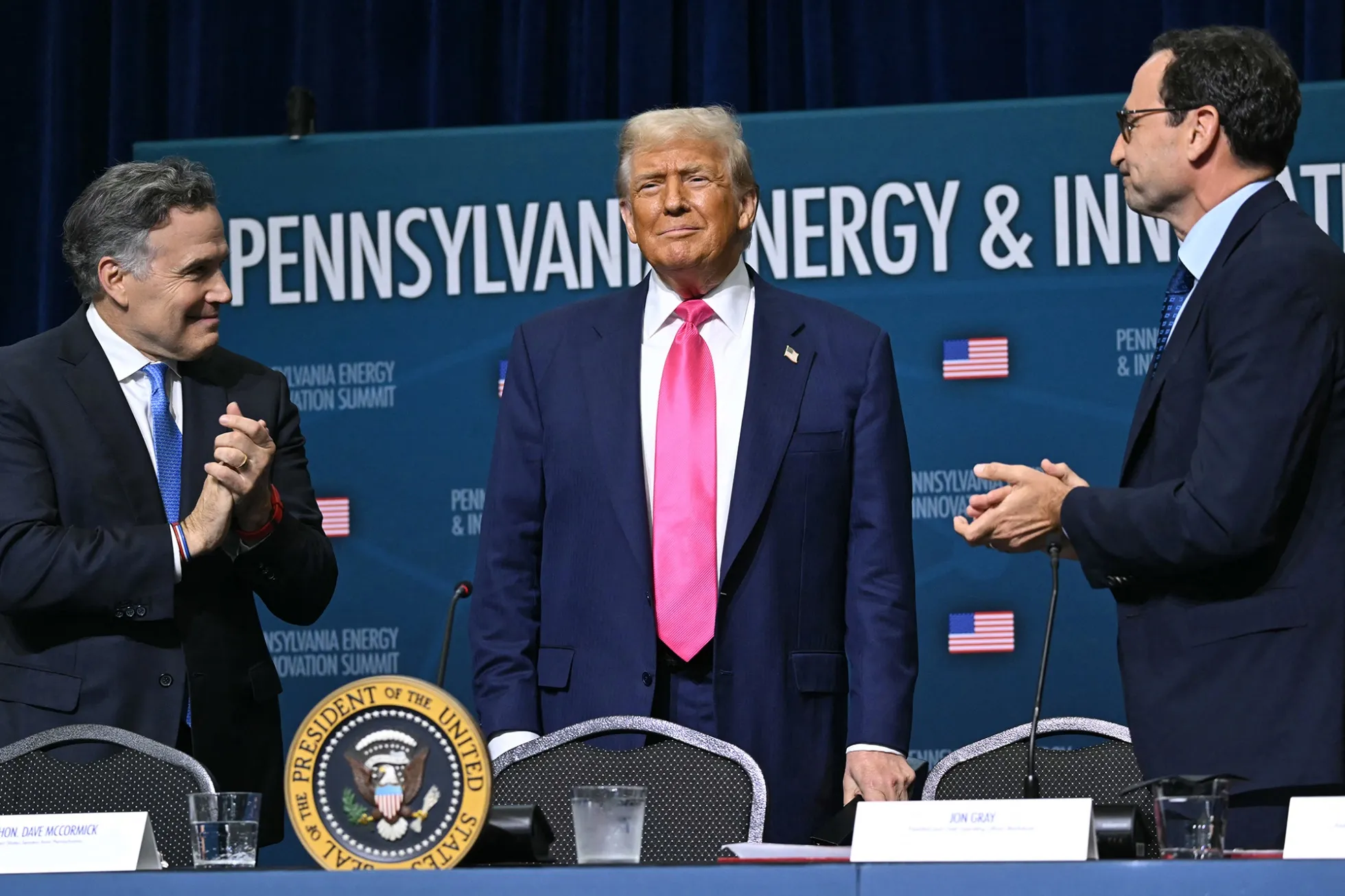

Trump Administration Bolsters AI with Massive Investments

On July 16, 2025, the Trump administration announced significant steps in advancing artificial intelligence (AI) and technology through large investments, positioning the United States as a leader in innovation. These efforts, highlighted during a recent Tech and AI Summit, underscore a strategic focus on economic growth and technological dominance.

Historic Investment in Pennsylvania

At the Tech and AI Summit, President Trump unveiled a $90 billion investment plan targeting Pennsylvania's energy and innovation sectors. The initiative aims to create jobs and strengthen the state's role as a hub for technological advancement. It is expected to spur progress in AI infrastructure, energy efficiency, and cutting-edge research, building a robust ecosystem for tech-driven economic growth.

Amazon's $20 Billion Data Center Commitment

A key highlight of the summit was Amazon's announcement of a $20 billion investment in new Pennsylvania data centers. These facilities will support AI-driven cloud computing and machine learning operations, reinforcing the state's growing reputation as a technology powerhouse. The data centers are expected to create high-skill jobs and expand the region's capacity to meet rising demand for AI processing power.

Pentagon's AI Integration

The Trump administration is also prioritizing AI integration within the Pentagon, with plans to deploy AI-powered bots to improve operational efficiency. This move signals a broader commitment to using AI for national security, streamlining defense processes, and maintaining a competitive edge in global military technology.

Future Implications for AI Leadership

These investments reflect the administration's proactive stance on keeping the U.S. at the forefront of AI and technology innovation.
By fostering partnerships with industry leaders like Amazon and prioritizing AI in defense, the Trump administration aims to drive economic growth while addressing strategic challenges in a rapidly evolving global tech landscape. The initiatives announced on July 16, 2025, mark a pivotal moment for the U.S. technology sector, with Pennsylvania poised to become a central player in the nation’s AI-driven future.

Major Layoffs Continue at Big Tech Amid U.S. Tech Restructuring

Microsoft: Another Round of ~9,000 Job Cuts

Microsoft has recently laid off approximately 9,000 employees, nearly 4% of its global workforce of about 228,000, in its largest workforce reduction in over two years. The cuts affect multiple divisions, including Xbox and sales, and follow earlier reductions of more than 6,000 employees in May. The company is restructuring to control costs amid massive AI infrastructure investment, reportedly totaling up to $80 billion in 2025.

Intel: Global Workforce Cut of 15–20%

Under new CEO Lip‑Bu Tan, Intel is carrying out one of the largest layoffs in its history, planning to eliminate 15% to 20% of its workforce. On a headcount of around 108,900, that is roughly 16,000 to 22,000 jobs globally. The plan includes major reductions across the Foundry and manufacturing divisions, affecting offices in Austin, Oregon, Santa Clara, Chandler, and Israel. Cuts include 110 jobs in Austin, 529 in Oregon, 410+ in Santa Clara, and executive-level positions. Intel has also confirmed it is closing its automotive chip unit, shifting focus to its core client and data-center segments as part of the broader restructuring.

Meta: Continued Performance-Based Reductions

Meta has continued downsizing through performance-based cuts, reportedly reducing around 5% of its workforce in early 2025. These layoffs are part of an ongoing effort to refocus on AI, wearable devices (like smart glasses), and core social media platforms. The company had already cut more than 21,000 roles since 2022 and remains committed to aligning staffing with its AI-led strategy.

Looking Ahead

While job losses may continue through 2025, many firms are also hiring aggressively in AI, cloud computing, and data infrastructure. This signals a shift in talent demand rather than an overall contraction. Workers and organizations alike must adapt quickly to this new reality, where resilience, upskilling, and adaptability define long-term success.

NVIDIA Stock Surges 15% in a Month: What’s Fueling the Rally?

NVIDIA has had an impressive run over the past month. From around $142 in early June to over $164 in early July, the stock climbed roughly 15%, reinforcing its role as a major beneficiary of the ongoing AI boom.

Key drivers behind the rally

1. Strong Q1 Fiscal 2026 Earnings
In its first-quarter report for fiscal 2026 (the quarter ended April 27, 2025), NVIDIA reported revenue of around $44 billion, up 69.2% year over year. The data center segment alone contributed approximately $39.1 billion, driven by soaring demand for AI infrastructure and high-performance computing (HPC).

2. Dominance in AI Hardware
NVIDIA continues to dominate the market for AI GPUs, helped by the rollout of its Blackwell platform. Cloud providers, enterprises, and governments are all scaling up their AI infrastructure aggressively, with NVIDIA at the center of this transformation.

3. Soaring Market Capitalization
In early July, NVIDIA briefly surpassed Microsoft to become the world's most valuable company, reaching a market capitalization of $3.86 trillion. As of July 9, it is approaching the $4 trillion milestone, a level no public company has ever reached.

4. Analyst Upgrades
Several analysts have raised their price targets for NVIDIA. Citi, for example, increased its target from $180 to $190, citing strong demand and improved gross margins. These upgrades have boosted sentiment among retail and institutional investors alike.

Final Thoughts

NVIDIA's recent performance is more than just a rally: it reflects the accelerating shift toward AI-driven computing. While short-term corrections are possible, the company's strong fundamentals, product leadership, and market dominance suggest continued growth potential. As always, investors should watch macroeconomic trends and regulatory developments, but NVIDIA's current trajectory remains highly promising.
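The headline percentages for NVIDIA can be reproduced from the rounded figures quoted in this article. Treat this as a back-of-the-envelope check rather than NVIDIA's reported results; in particular, the prior-year revenue is only implied by the stated 69.2% growth rate, not taken from a separate source:

```latex
\[
\begin{aligned}
\text{One-month stock gain} &= \frac{164 - 142}{142} = \frac{22}{142} \approx 0.155 \;(\approx 15.5\%) \\
\text{Implied prior-year Q1 revenue} &= \frac{44}{1.692} \approx 26.0 \text{ billion USD} \\
\text{Data center share of revenue} &= \frac{39.1}{44} \approx 0.889 \;(\approx 88.9\%)
\end{aligned}
\]
```

Because the starting and ending prices are approximate ("around" $142 and "over" $164), the monthly gain is best read as roughly 15%, and the data center share shows how heavily the quarter depended on AI infrastructure demand.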
